Is There a Fake News Generation?

Helen Lee Bouygues

September 2020

Introduction

Many attribute the rise of fake news and clickbait to young people and their use of social media. CNN recently ran a story titled “Is ‘fake news’ fooling kids? The answer is yes.”

But over the past few years, there’s been a growing realization that older Americans are at least as likely to fall for fake news. A few studies have suggested that people over 65 are as vulnerable to disinformation as younger generations, if not more so.

This newer research typically examines how people share information on social media sites, and a number of the studies have reported surprising results. For instance, one showed that compared to younger counterparts, “users over 65 shared nearly seven times as many articles from fake news domains.”

In light of recent disinformation campaigns, the Reboot Foundation decided to explore this issue in more depth: How exactly does a person’s age influence their ability to resist clickbait, identify legitimate news headlines, and determine the reliability of websites? To what extent do other factors, like the time spent on social media or political affiliations, influence clickbait preference and internet literacy?

Specifically, this study hoped to measure whether older adults (over 60 years old) are better than younger adults (aged 18-30) at identifying legitimate news headlines. The findings from the research are especially important, given recent developments. For one, a number of studies, including one from Reboot in April 2020, show that social media is a key driver of misinformation about COVID-19.

In addition, the United States will hold a national election on November 3. After the last presidential election, many blamed disinformation on social media for unduly influencing what turned out to be a narrow win by the country’s current president.

Major Findings

The research resulted in four main findings:

Older Americans prefer clickbait more than younger Americans.

Clickbait is a sensational headline, title, or image that, via a link, connects readers to a site containing questionable and, in many cases, false information. This study found that people 60 years of age and older exhibited a significantly stronger preference for clickbait than those in the 18-to-30-year-old group.

To test participants’ preference for clickbait, we asked them to sort groups of headlines by preference. Each group was made up of several clickbait/neutral pairs, and each pair contained a clickbait headline and a neutral headline for the same story. For instance, “What is wrong, Joe Biden? Old Joe Confuses Iraq, Iran, and Ukraine” was a clickbait headline for a story describing a Biden campaign rally. “Biden Misspeaks at Campaign Rally” was the neutral version of the same story.

Across the board, participants preferred clickbait headlines to neutral headlines. Although some participants always preferred the neutral versions of the headline and some people always preferred the clickbait versions of the headline, on average, participants preferred the clickbait headlines over two-thirds of the time.
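The report does not spell out how a participant’s sorted headlines were turned into a preference percentage. One plausible scoring rule, assumed here purely for illustration, is to count a pair as “clickbait preferred” whenever the participant ranks the clickbait version above its neutral counterpart, and to report the share of pairs where that happens. The `HeadlinePair` class, the `clickbait_preference_rate` function, the “Story B” and “Story C” headlines, and the sample ranking are all hypothetical; only the Biden pair comes from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HeadlinePair:
    """A clickbait headline and a neutral headline for the same story."""
    clickbait: str
    neutral: str


def clickbait_preference_rate(ranking: list[str], pairs: list[HeadlinePair]) -> float:
    """Share of pairs whose clickbait version the participant ranked higher.

    `ranking` is one participant's ordering of every headline in the group,
    most preferred first. This is a hypothetical scoring rule, not
    necessarily the one the study used.
    """
    # Map each headline to its rank position (0 = most preferred).
    position = {headline: i for i, headline in enumerate(ranking)}
    preferred = sum(
        1 for pair in pairs if position[pair.clickbait] < position[pair.neutral]
    )
    return preferred / len(pairs)


# The Biden pair comes from the report; stories B and C are placeholders.
pairs = [
    HeadlinePair(
        "What is wrong, Joe Biden? Old Joe Confuses Iraq, Iran, and Ukraine",
        "Biden Misspeaks at Campaign Rally",
    ),
    HeadlinePair("Story B (clickbait)", "Story B (neutral)"),
    HeadlinePair("Story C (clickbait)", "Story C (neutral)"),
]

# One made-up participant's sort order, most preferred first.
ranking = [
    pairs[0].clickbait,
    "Story B (neutral)",
    "Story C (clickbait)",
    pairs[0].neutral,
    "Story C (neutral)",
    "Story B (clickbait)",
]

print(round(clickbait_preference_rate(ranking, pairs), 3))  # prints 0.667
```

Here the participant favors the clickbait version in two of three pairs. Averaging such per-participant rates within each age group would yield figures of the same form as the group averages reported in the study, though the study’s actual scoring procedure may differ.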

The difference in clickbait preference between the age groups was clear in the data. The older group preferred the clickbait versions of headlines nearly 81 percent of the time. The younger group preferred clickbait versions closer to 72 percent of the time.

At the same time, the study found that those who spend more hours on social media, whatever the age group, tend to display a reduced preference for clickbait. This effect was small, however, and it’s possible that people who spend many hours on social media simply become habituated to clickbait headlines and exhibit more of a preference for less exaggerated headlines over time.

The more time spent on social media, the worse the user’s news judgment.

Across age, education, and political ideology, there is a significant negative correlation between social media use and overall news discernment, that is, the ability to tell legitimate news headlines from fake ones. Put more simply, the more people use social media, the worse their judgment.

This relationship still held true even after excluding “power users,” or those spending more than 10 hours per week on social media. In other words, even among moderate and light social media users, more use meant worse judgment.

Note that distinguishing fake news from legitimate news is different from preferring clickbait. The latter is about what someone wants to read. The former is about what someone believes to be true. These findings suggest that a preference for certain kinds of headlines doesn’t necessarily equate to an endorsement of those headlines as true.

The results of this study parallel other findings that show people may share articles even when they don’t believe those articles are true. People share online articles and videos for many reasons, not just to pass along information they believe to be true. Future research might shed more light on these nuances.

It’s important to note that this effect, although statistically significant, was not very large. For instance, the correlation between social media use and news discernment suggests that someone who spends 10 or more hours on social media a week would inaccurately assess about one more headline out of 12 than a less frequent user. Social media use is certainly not the only — or the main — factor explaining variation in news discernment. It’s likely that other, unmeasured variables are playing a role.

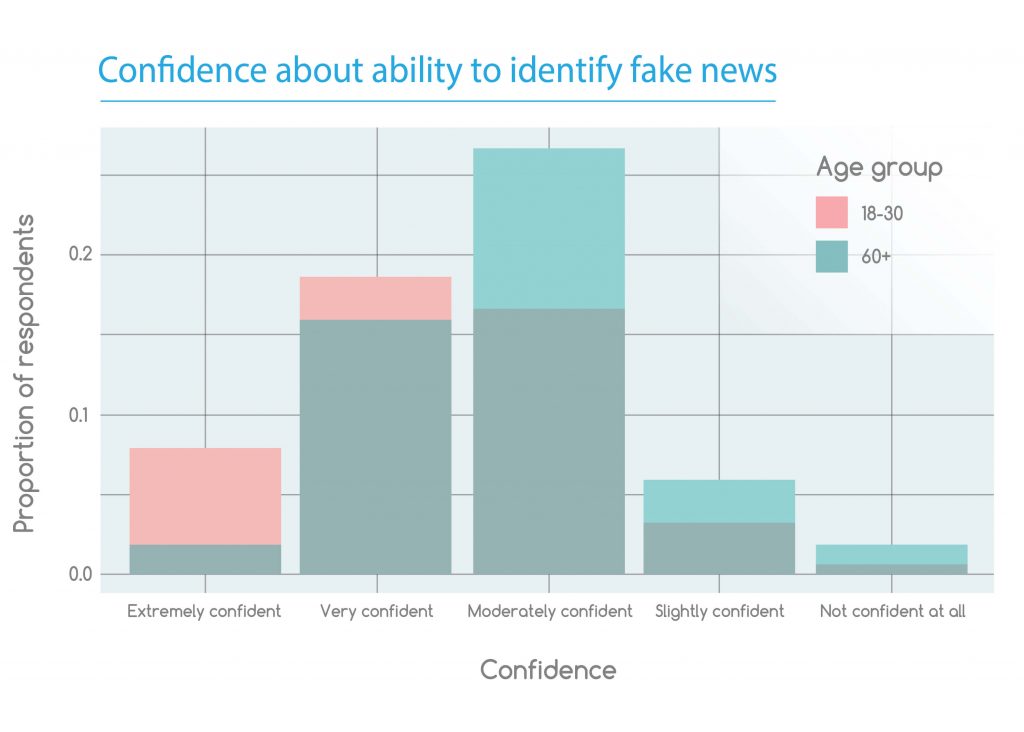

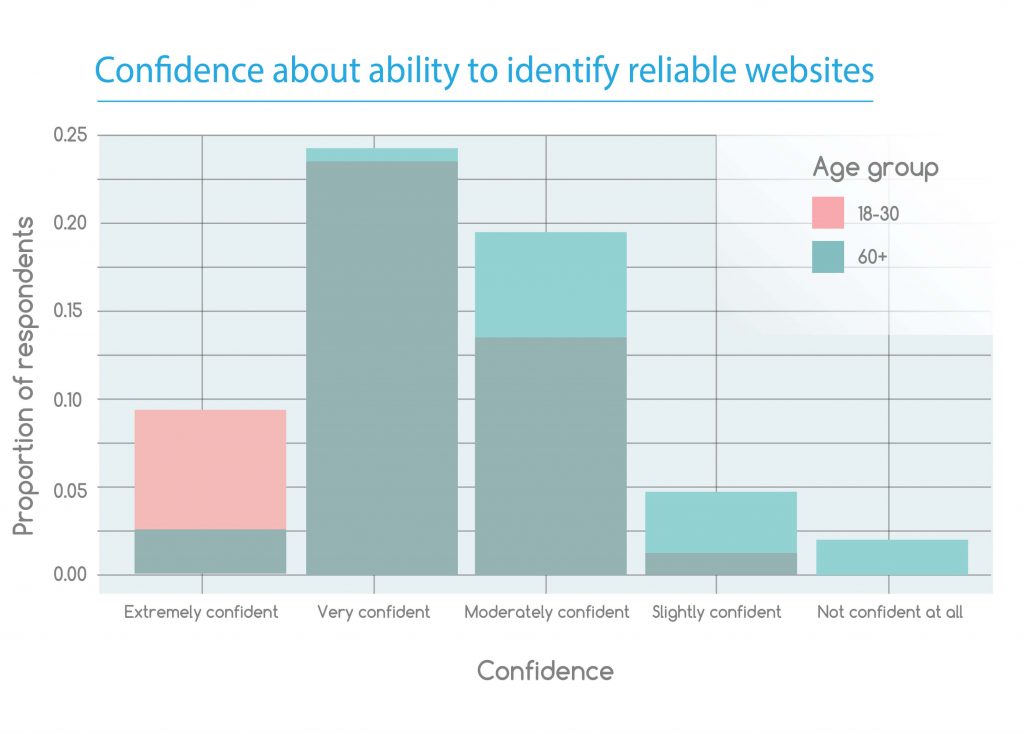

Internet users, especially young people, think they’re skilled at examining information online, but they are not nearly as good at identifying fake news as they believe.

A key weakness in navigating information online is overconfidence. Someone who believes they’re good at identifying fake news but actually isn’t is likely to have far more trouble with fake news than people who assess their own abilities more accurately.

Most of the study’s participants felt confident about their abilities to critically examine information on the internet. When asked “How confident are you in your ability to distinguish between ‘fake’ news reports and legitimate news reports,” the overwhelming majority, young and old, said they were moderately to extremely confident. The same was true of a similar question about their ability to detect unreliable websites. And yet, most (64 percent of 18-to-30-year-olds and 51 percent of those over 60) failed to recognize that the websites they were asked to review were unreliable.

Whatever their levels of confidence, the younger and older age groups offered similar explanations as to why they believed the websites were reliable. The data suggest that both groups judge websites superficially — weighing heavily, for example, whether they provide charts, graphs, and references to studies or maintain a scientific or professional tone. Some participants believed that the nonprofit status of the websites’ hosts and the presence of authors with advanced degrees were enough to establish the sites’ reliability.

In short, people are overconfident about their media literacy skills: they believe they have more skill than they actually do. The next finding explores this gap further.

For all age groups, determining the reliability of websites is problematic.

Disinformation on the internet isn’t limited to fake news. One technique often used by lobbying groups, for instance, is to create a website that appears to be an authoritative, objective, and independent take on a controversial issue. These websites can give the appearance that the overwhelming weight of scientific authority supports a partisan position even when it doesn’t.

This study examined whether age affects how people judge such websites. Study participants were asked to visit two such websites, http://www.co2science.org/ and http://www.bisphenol-a.org/, and determine whether they were reliable, meaning the information they share is factual and relevant. The websites make scientific claims and appear to be objective, third-party takes on controversial issues (climate change and the use of BPA, or bisphenol A, in plastic and resin products), but they are, in fact, funded by industry groups and mostly outdated.

For both websites, the best predictor of finding them reliable was party identification: Republicans were more likely than Democrats to find the sites reliable. This partisan split is perhaps expected for the climate change site, since climate change is, in general, a politically divisive topic. By contrast, differences between the age groups were non-existent.

Once again, time spent on social media, regardless of age, played a role in reliability judgments. The effect was only about a fifth the size of the effect of political ideology, but the more time people spent on social media, the more likely they were to rate unreliable websites as “reliable.” This is consistent with the idea that excessive social media use impairs people’s ability to critically examine online sources.

To gain more insight into why participants found the websites reliable or unreliable, we asked them to explain their reasoning. These explanations reveal, at least in a small way, how people decide whether a website is reliable in the first place.

One persistent response was that a “.org” at the end of a website address was a sure sign that a site was reliable (or a sure sign that it was not reliable). Others relied mainly on the fact that the websites could be found through Google or were referenced, in perfunctory ways, by other websites. A number of participants judged the websites solely by whether their content accorded with what they already believed to be true.

While such traits may help build a case for reliability, they are not sufficient evidence on their own, and the Reboot study’s data show that few participants used more sophisticated indicators of reliability.

A small amount of digging reveals that both websites are funded by industry advocacy or lobbying groups. But fewer than 10 percent of the study’s participants noted that fact. In addition, neither website has been updated for several years, so any information that may (or may not) have been accurate at the time could now be out of date. Only 4 percent of participants noted this.

In some instances, the websites contain logical contradictions, for instance citing studies that suggest the opposite of what the website itself says, or pointing out a logical fallacy only to commit the same fallacy themselves. Just 1 percent of the study’s participants noted these inconsistencies.

In some cases, participants also misread the websites they were asked to judge. One, for example, said: “The information contained is probably reliable and factually correct. We have known for years that BPA-filled products are probably not good for humans.” But the website argues the exact opposite — that those same products are safe for humans.

Not all participants responded this way. A few individuals, around 1 percent, acted like true fact-checkers. One reported: “I went straight to the domain lookup to see who owns the name. The registrant organization is the American Chemistry Council in Washington DC.” After some digging, the participant, a 62-year-old, found that the council is “a lobbying and policy-shaping organization that campaigned heavily to defeat California’s bill to ban bisphenol A in 2008.” She later stated, “I cannot trust any site that is designed solely to line the pockets of lobbyists and chemical companies.”

Methodology

Survey procedure. The Reboot Foundation designed a survey that evaluated the following abilities and preferences:

- A preference for clickbait headlines

- The ability to distinguish fake news headlines from legitimate news headlines

- The “fluency effect”: the tendency to judge previously seen headlines as more reliable or true than headlines never seen before

- The ability to determine the reliability of websites

- Metacognitive knowledge: participants’ awareness of their own ability to both identify fake news and determine the reliability of websites

The survey was posted on Amazon’s Mechanical Turk and made available to native English speakers in the United States. It asked participants to answer several multiple-choice questions covering tasks such as sorting headlines by preference, evaluating fake news headlines, and visiting outside websites. They were also asked to write short responses explaining why they found the websites they reviewed reliable or not reliable.

Survey sample. After data cleaning, the study had 79 respondents in the 60-plus age group and 71 respondents in the 18-30 age group. Among the 60-plus age group, there was a relatively even distribution of self-identified Republicans and Democrats (54 percent to 46 percent, respectively), while the 18-30 age group skewed Democratic (83 percent Democratic to 17 percent Republican).

The sample audience was, in general, well-educated. Ninety percent of respondents had completed at least some college; among those, 43 percent had earned a college degree and 18 percent a graduate degree. The participants were also almost evenly split by gender: 47 percent female, 53 percent male.

Part of our goal was to replicate two existing studies in this area. Specifically, our research sought to confirm whether older adults (over 60 years old) are indeed better than younger adults (aged 18-30) at identifying legitimate news headlines. Our research also went further, though, testing whether older adults would judge headlines they had seen before as more reliable than headlines they were seeing for the first time. This extends prior research on the fluency effect for fake news headlines to different age groups.

The idea that older people are more likely to fall for fake news has some basis in the science of aging. As people grow older, some cognitive skills tend to decline, and some suspect that older internet users are not as savvy at using social media as their younger counterparts.

Discussion

The Reboot study also aimed to measure any differences between 18-to-30-year-olds and adults aged 60 and older in their preference for clickbait versions of headlines. This aspect of the study expands upon research from Italy suggesting that a subset of older adults prefer clickbait headlines over more neutral ones to a greater degree than other age groups.

Finally, this study set out to measure whether older adults were any better than younger adults at determining whether a website was reliable, extending research previously performed only on young adults to an older population. We also aimed to measure whether older or younger adults were better at assessing their own skills. This would replicate earlier research on metacognition in identifying legitimate news headlines and extend existing research on determining the reliability of websites to older populations.

Conclusion

These findings underscore two critical takeaways. First, media literacy is not just about teaching children; it’s about all Americans becoming more savvy, critical news consumers. To be sure, the Reboot Foundation believes that media literacy programs should be universal in K-12 schools and that every student should graduate high school equipped with key media literacy skills.

But programs for adults are vital, too. Libraries are a natural place to teach media literacy skills to adults, and some libraries are doing just that. These kinds of programs should be the rule, not the exception.

Second, the media literacy crisis is not just about the fake news headlines that pop up on social media feeds. As others have argued, it’s about a variety of forms of disinformation. The finding that many participants confidently believed a “.org” domain automatically makes a website reliable (or automatically makes it unreliable) is troubling. Many of our participants were convinced by long lists of citations or by scientific-looking charts and graphs.

Whether someone ultimately concludes that a website run by an industry-backed lobbying group is reliable or not is up to them. What’s important is that individuals look beyond superficial features of reliability and check whether a website is actually backed by industry, whether the information is (or isn’t) out of date, whether the experts cited are actually authorities in a relevant field, and so on.

Being a savvy news consumer is hard. That is exactly why the best response is to train people, young and old alike, in media literacy.
